number (int64, 2 to 7.91k) | title (string, lengths 1 to 290) | body (string, lengths 0 to 228k) | state (string, 2 classes) | created_at (timestamp[s], 2020-04-14 18:18:51 to 2025-12-16 10:45:02) | updated_at (timestamp[s], 2020-04-29 09:23:05 to 2025-12-16 19:34:46) | closed_at (timestamp[s], 2020-04-29 09:23:05 to 2025-12-16 14:20:48, nullable) | url (string, lengths 48 to 51) | author (string, lengths 3 to 26, nullable) | comments_count (int64, 0 to 70) | labels (list, lengths 0 to 4) |
|---|---|---|---|---|---|---|---|---|---|---|

5,568

|

dataset.to_iterable_dataset() loses useful info like dataset features

|

### Describe the bug

Hello,

I like the new `to_iterable_dataset` feature but I noticed something that seems to be missing.

When using `to_iterable_dataset` to transform a map-style dataset into an iterable dataset, you lose valuable metadata such as the features.

These metadata are useful if you want to interleave iterable datasets, cast columns etc.

### Steps to reproduce the bug

```python
from datasets import load_dataset

dataset = load_dataset("lhoestq/demo1")["train"]
print(dataset.features)
# {'id': Value(dtype='string', id=None), 'package_name': Value(dtype='string', id=None), 'review': Value(dtype='string', id=None), 'date': Value(dtype='string', id=None), 'star': Value(dtype='int64', id=None), 'version_id': Value(dtype='int64', id=None)}

dataset = dataset.to_iterable_dataset()
print(dataset.features)
# None
```

### Expected behavior

Keep the relevant information

### Environment info

datasets==2.10.0

|

CLOSED

| 2023-02-23T13:45:33

| 2023-02-24T13:22:36

| 2023-02-24T13:22:36

|

https://github.com/huggingface/datasets/issues/5568

|

bruno-hays

| 3

|

[

"enhancement",

"good first issue"

] |

5,566

|

Directly reading parquet files in a s3 bucket from the load_dataset method

|

### Feature request

Right now, we have to download the Parquet file to local storage before reading it. The ability to read it directly from a given bucket address would be beneficial.

### Motivation

In a production setup, this feature would help us a lot, because we would not need to move training data files between storage locations.

### Your contribution

I am willing to help if there is any way I can.

|

OPEN

| 2023-02-22T22:13:40

| 2023-02-23T11:03:29

| null |

https://github.com/huggingface/datasets/issues/5566

|

shamanez

| 1

|

[

"duplicate",

"enhancement"

] |

5,555

|

`.shuffle` throwing error `ValueError: Protocol not known: parent`

|

### Describe the bug

```

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In [16], line 1

----> 1 train_dataset = train_dataset.shuffle()

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/arrow_dataset.py:551, in transmit_format.<locals>.wrapper(*args, **kwargs)

544 self_format = {

545 "type": self._format_type,

546 "format_kwargs": self._format_kwargs,

547 "columns": self._format_columns,

548 "output_all_columns": self._output_all_columns,

549 }

550 # apply actual function

--> 551 out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

552 datasets: List["Dataset"] = list(out.values()) if isinstance(out, dict) else [out]

553 # re-apply format to the output

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/fingerprint.py:480, in fingerprint_transform.<locals>._fingerprint.<locals>.wrapper(*args, **kwargs)

476 validate_fingerprint(kwargs[fingerprint_name])

478 # Call actual function

--> 480 out = func(self, *args, **kwargs)

482 # Update fingerprint of in-place transforms + update in-place history of transforms

484 if inplace: # update after calling func so that the fingerprint doesn't change if the function fails

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/arrow_dataset.py:3616, in Dataset.shuffle(self, seed, generator, keep_in_memory, load_from_cache_file, indices_cache_file_name, writer_batch_size, new_fingerprint)

3610 return self._new_dataset_with_indices(

3611 fingerprint=new_fingerprint, indices_cache_file_name=indices_cache_file_name

3612 )

3614 permutation = generator.permutation(len(self))

-> 3616 return self.select(

3617 indices=permutation,

3618 keep_in_memory=keep_in_memory,

3619 indices_cache_file_name=indices_cache_file_name if not keep_in_memory else None,

3620 writer_batch_size=writer_batch_size,

3621 new_fingerprint=new_fingerprint,

3622 )

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/arrow_dataset.py:551, in transmit_format.<locals>.wrapper(*args, **kwargs)

544 self_format = {

545 "type": self._format_type,

546 "format_kwargs": self._format_kwargs,

547 "columns": self._format_columns,

548 "output_all_columns": self._output_all_columns,

549 }

550 # apply actual function

--> 551 out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

552 datasets: List["Dataset"] = list(out.values()) if isinstance(out, dict) else [out]

553 # re-apply format to the output

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/fingerprint.py:480, in fingerprint_transform.<locals>._fingerprint.<locals>.wrapper(*args, **kwargs)

476 validate_fingerprint(kwargs[fingerprint_name])

478 # Call actual function

--> 480 out = func(self, *args, **kwargs)

482 # Update fingerprint of in-place transforms + update in-place history of transforms

484 if inplace: # update after calling func so that the fingerprint doesn't change if the function fails

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/arrow_dataset.py:3266, in Dataset.select(self, indices, keep_in_memory, indices_cache_file_name, writer_batch_size, new_fingerprint)

3263 return self._select_contiguous(start, length, new_fingerprint=new_fingerprint)

3265 # If not contiguous, we need to create a new indices mapping

-> 3266 return self._select_with_indices_mapping(

3267 indices,

3268 keep_in_memory=keep_in_memory,

3269 indices_cache_file_name=indices_cache_file_name,

3270 writer_batch_size=writer_batch_size,

3271 new_fingerprint=new_fingerprint,

3272 )

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/arrow_dataset.py:551, in transmit_format.<locals>.wrapper(*args, **kwargs)

544 self_format = {

545 "type": self._format_type,

546 "format_kwargs": self._format_kwargs,

547 "columns": self._format_columns,

548 "output_all_columns": self._output_all_columns,

549 }

550 # apply actual function

--> 551 out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

552 datasets: List["Dataset"] = list(out.values()) if isinstance(out, dict) else [out]

553 # re-apply format to the output

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/fingerprint.py:480, in fingerprint_transform.<locals>._fingerprint.<locals>.wrapper(*args, **kwargs)

476 validate_fingerprint(kwargs[fingerprint_name])

478 # Call actual function

--> 480 out = func(self, *args, **kwargs)

482 # Update fingerprint of in-place transforms + update in-place history of transforms

484 if inplace: # update after calling func so that the fingerprint doesn't change if the function fails

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/arrow_dataset.py:3389, in Dataset._select_with_indices_mapping(self, indices, keep_in_memory, indices_cache_file_name, writer_batch_size, new_fingerprint)

3387 logger.info(f"Caching indices mapping at {indices_cache_file_name}")

3388 tmp_file = tempfile.NamedTemporaryFile("wb", dir=os.path.dirname(indices_cache_file_name), delete=False)

-> 3389 writer = ArrowWriter(

3390 path=tmp_file.name, writer_batch_size=writer_batch_size, fingerprint=new_fingerprint, unit="indices"

3391 )

3393 indices = indices if isinstance(indices, list) else list(indices)

3395 size = len(self)

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/datasets/arrow_writer.py:315, in ArrowWriter.__init__(self, schema, features, path, stream, fingerprint, writer_batch_size, hash_salt, check_duplicates, disable_nullable, update_features, with_metadata, unit, embed_local_files, storage_options)

312 self._disable_nullable = disable_nullable

314 if stream is None:

--> 315 fs_token_paths = fsspec.get_fs_token_paths(path, storage_options=storage_options)

316 self._fs: fsspec.AbstractFileSystem = fs_token_paths[0]

317 self._path = (

318 fs_token_paths[2][0]

319 if not is_remote_filesystem(self._fs)

320 else self._fs.unstrip_protocol(fs_token_paths[2][0])

321 )

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/fsspec/core.py:593, in get_fs_token_paths(urlpath, mode, num, name_function, storage_options, protocol, expand)

591 else:

592 urlpath = stringify_path(urlpath)

--> 593 chain = _un_chain(urlpath, storage_options or {})

594 if len(chain) > 1:

595 inkwargs = {}

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/fsspec/core.py:330, in _un_chain(path, kwargs)

328 for bit in reversed(bits):

329 protocol = split_protocol(bit)[0] or "file"

--> 330 cls = get_filesystem_class(protocol)

331 extra_kwargs = cls._get_kwargs_from_urls(bit)

332 kws = kwargs.get(protocol, {})

File /opt/conda/envs/pytorch/lib/python3.9/site-packages/fsspec/registry.py:240, in get_filesystem_class(protocol)

238 if protocol not in registry:

239 if protocol not in known_implementations:

--> 240 raise ValueError("Protocol not known: %s" % protocol)

241 bit = known_implementations[protocol]

242 try:

ValueError: Protocol not known: parent

```

This is what the `train_dataset` object looks like

```

Dataset({

features: ['label', 'input_ids', 'attention_mask'],

num_rows: 364166

})

```

### Steps to reproduce the bug

The `train_dataset` object is created by concatenating two datasets. `shuffle` is then called on it, which raises the error shown above.

### Expected behavior

Should shuffle the dataset properly.

### Environment info

- `datasets` version: 2.6.1

- Platform: Linux-5.15.0-1022-aws-x86_64-with-glibc2.31

- Python version: 3.9.13

- PyArrow version: 10.0.0

- Pandas version: 1.4.4

|

OPEN

| 2023-02-20T21:33:45

| 2023-02-27T09:23:34

| null |

https://github.com/huggingface/datasets/issues/5555

|

prabhakar267

| 4

|

[] |

5,548

|

Apply flake8-comprehensions to codebase

|

### Feature request

Apply ruff's flake8-comprehensions checks to the codebase.

### Motivation

This should strictly improve the performance / readability of the codebase by removing unnecessary iteration, function calls, etc. This should generate better Python bytecode which should strictly improve performance.

I already applied these fixes to PyTorch and SymPy with little issue and have opened PRs to diffusers and transformers to do this as well.

### Your contribution

Making a PR.

|

CLOSED

| 2023-02-19T20:05:38

| 2023-02-23T13:59:41

| 2023-02-23T13:59:41

|

https://github.com/huggingface/datasets/issues/5548

|

Skylion007

| 0

|

[

"enhancement"

] |

5,546

|

Downloaded datasets do not cache at $HF_HOME

|

### Describe the bug

The Hugging Face course (https://huggingface.co/course/chapter3/2?fw=pt) says that if we set HF_HOME, downloaded datasets are cached at the specified location, but they are not. Models downloaded from checkpoint names are cached at HF_HOME, but this is not the case for datasets: they are still cached at ~/.cache/huggingface/datasets.

### Steps to reproduce the bug

Run the following code

```

from datasets import load_dataset

raw_datasets = load_dataset("glue", "mrpc")

raw_datasets

```

It downloads and stores the dataset at ~/.cache/huggingface/datasets.

### Expected behavior

The dataset should be cached at HF_HOME.

### Environment info

python 3.10.6

Kubuntu 22.04

HF_HOME located on a separate partition

|

CLOSED

| 2023-02-18T13:30:35

| 2023-07-24T14:22:43

| 2023-07-24T14:22:43

|

https://github.com/huggingface/datasets/issues/5546

|

ErfanMoosaviMonazzah

| 1

|

[] |

5,543

|

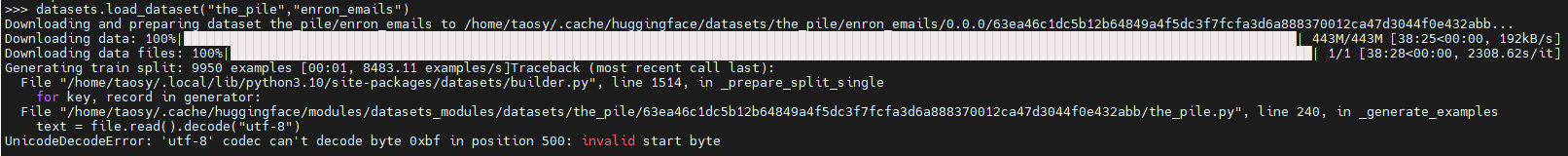

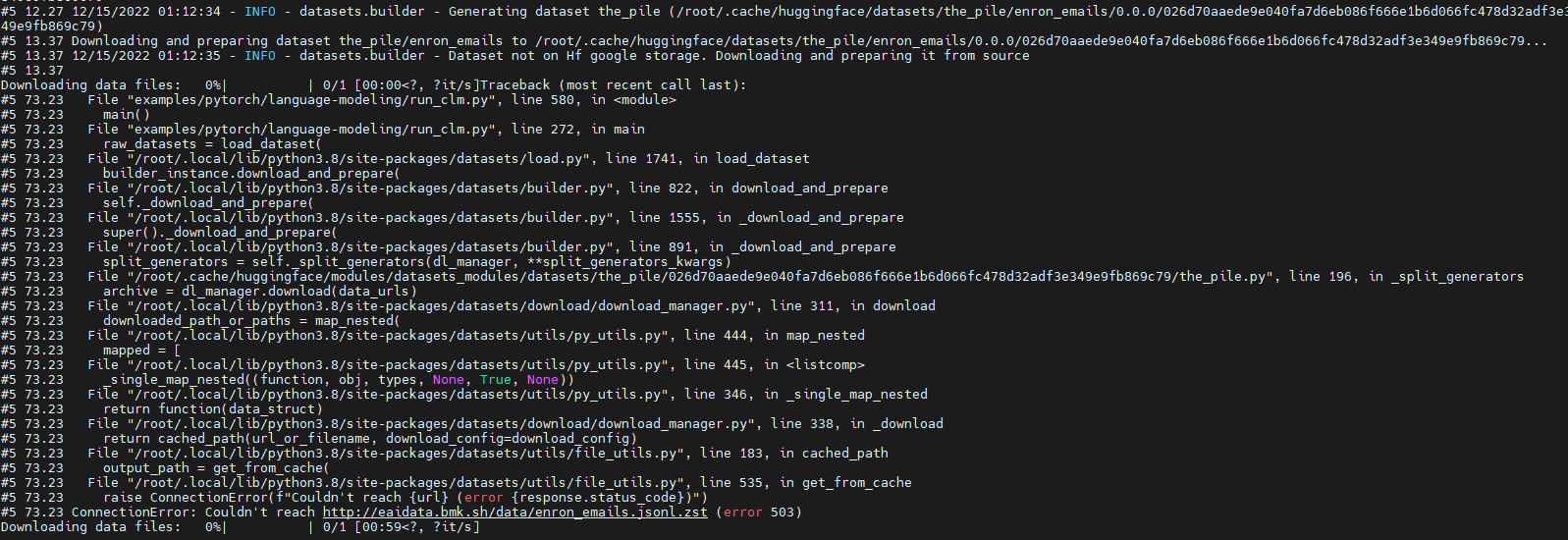

the pile datasets url seems to change back

|

### Describe the bug

In #3627, the host URL of the Pile dataset became `https://mystic.the-eye.eu`. That new URL is now broken, but `https://the-eye.eu` seems to work again.

### Steps to reproduce the bug

```python3

from datasets import load_dataset

dataset = load_dataset("bookcorpusopen")

```

shows

```python3

ConnectionError: Couldn't reach https://mystic.the-eye.eu/public/AI/pile_preliminary_components/books1.tar.gz (ProxyError(MaxRetryError("HTTPSConnectionPool(host='mystic.the-eye.eu', port=443): Max retries exceeded with url: /public/AI/pile_preliminary_components/books1.tar.gz (Caused by ProxyError('Cannot connect to proxy.', OSError('Tunnel connection failed: 504 Gateway Timeout')))")))

```

### Expected behavior

Downloading as normal.

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-5.4.143.bsk.7-amd64-x86_64-with-glibc2.31

- Python version: 3.9.2

- PyArrow version: 6.0.1

- Pandas version: 1.5.3

|

CLOSED

| 2023-02-17T08:40:11

| 2023-02-21T06:37:00

| 2023-02-20T08:41:33

|

https://github.com/huggingface/datasets/issues/5543

|

wjfwzzc

| 2

|

[] |

5,541

|

Flattening indices in selected datasets is extremely inefficient

|

### Describe the bug

If we perform a `select` (or `shuffle`, `train_test_split`, etc.) operation on a dataset, we end up with a dataset with an `indices_table`. Currently, flattening such a dataset consumes a lot of memory, and the resulting flat dataset contains ChunkedArrays with as many chunks as there are rows. This is extremely inefficient and slows down operations on the flat dataset, e.g., saving/loading the dataset to disk becomes really slow.

Perhaps more importantly, loading the dataset back from disk basically loads the whole table into RAM, as it cannot take advantage of memory mapping.

### Steps to reproduce the bug

The following script reproduces the issue:

```python
import gc
import os
import psutil
import tempfile
import time

from datasets import Dataset

DATASET_SIZE = 5000000


def profile(func):
    def wrapper(*args, **kwargs):
        mem_before = psutil.Process(os.getpid()).memory_info().rss / (1024 * 1024)
        start = time.time()
        # Run function here
        out = func(*args, **kwargs)
        end = time.time()
        mem_after = psutil.Process(os.getpid()).memory_info().rss / (1024 * 1024)
        print(f"{func.__name__} -- RAM memory used: {mem_after - mem_before} MB -- Total time: {end - start:.6f} s")
        return out

    return wrapper


def main():
    ds = Dataset.from_list([{'col': i} for i in range(DATASET_SIZE)])
    print(f"Num chunks for original ds: {ds.data['col'].num_chunks}")

    with tempfile.TemporaryDirectory() as tmpdir:
        path1 = os.path.join(tmpdir, 'ds1')
        print("Original ds save/load")
        profile(ds.save_to_disk)(path1)
        ds_loaded = profile(Dataset.load_from_disk)(path1)
        print(f"Num chunks for original ds after reloading: {ds_loaded.data['col'].num_chunks}")

        print("")

        ds_select = ds.select(reversed(range(len(ds))))
        print(f"Num chunks for selected ds: {ds_select.data['col'].num_chunks}")

        del ds
        del ds_loaded
        gc.collect()

        # This would happen anyway when we call save_to_disk
        ds_select = profile(ds_select.flatten_indices)()
        print(f"Num chunks for selected ds after flattening: {ds_select.data['col'].num_chunks}")

        print("")

        path2 = os.path.join(tmpdir, 'ds2')
        print("Selected ds save/load")
        profile(ds_select.save_to_disk)(path2)

        del ds_select
        gc.collect()

        ds_select_loaded = profile(Dataset.load_from_disk)(path2)
        print(f"Num chunks for selected ds after reloading: {ds_select_loaded.data['col'].num_chunks}")


if __name__ == '__main__':
    main()
```

Sample result:

```

Num chunks for original ds: 1

Original ds save/load

save_to_disk -- RAM memory used: 0.515625 MB -- Total time: 0.253888 s

load_from_disk -- RAM memory used: 42.765625 MB -- Total time: 0.015176 s

Num chunks for original ds after reloading: 5000

Num chunks for selected ds: 1

flatten_indices -- RAM memory used: 4852.609375 MB -- Total time: 46.116774 s

Num chunks for selected ds after flattening: 5000000

Selected ds save/load

save_to_disk -- RAM memory used: 1326.65625 MB -- Total time: 42.309825 s

load_from_disk -- RAM memory used: 2085.953125 MB -- Total time: 11.659137 s

Num chunks for selected ds after reloading: 5000000

```

### Expected behavior

Saving/loading the dataset should be much faster and consume almost no extra memory thanks to pyarrow memory mapping.

### Environment info

- `datasets` version: 2.9.1.dev0

- Platform: macOS-13.1-arm64-arm-64bit

- Python version: 3.10.8

- PyArrow version: 11.0.0

- Pandas version: 1.5.3

|

CLOSED

| 2023-02-17T01:52:24

| 2023-02-22T13:15:20

| 2023-02-17T11:12:33

|

https://github.com/huggingface/datasets/issues/5541

|

marioga

| 3

|

[] |

5,539

|

IndexError: invalid index of a 0-dim tensor. Use `tensor.item()` in Python or `tensor.item<T>()` in C++ to convert a 0-dim tensor to a number

|

### Describe the bug

When a dataset contains a 0-dim tensor, `formatting.py` raises the following error and fails.

```bash

Traceback (most recent call last):

File "<path>/lib/python3.8/site-packages/datasets/formatting/formatting.py", line 501, in format_row

return _unnest(formatted_batch)

File "<path>/lib/python3.8/site-packages/datasets/formatting/formatting.py", line 137, in _unnest

return {key: array[0] for key, array in py_dict.items()}

File "<path>/lib/python3.8/site-packages/datasets/formatting/formatting.py", line 137, in <dictcomp>

return {key: array[0] for key, array in py_dict.items()}

IndexError: invalid index of a 0-dim tensor. Use `tensor.item()` in Python or `tensor.item<T>()` in C++ to convert a 0-dim tensor to a number

```

### Steps to reproduce the bug

Load any dataset and set a transform that adds a 0-dim tensor, or create/find a dataset that contains a 0-dim tensor. For example:

```python
from datasets import load_dataset
import torch

dataset = load_dataset("lambdalabs/pokemon-blip-captions", split='train')


def t(batch):
    return {"test": torch.tensor(1)}


dataset.set_transform(t)
d_0 = dataset[0]
```

### Expected behavior

The extractor should correctly get a row from the dataset, even if it contains a 0-dim tensor.
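One possible guard, given only as a minimal sketch: the traceback shows `_unnest` indexing every column with `array[0]`, which cannot work for a 0-dim value. The helper below is hypothetical (`unnest_row` is not a `datasets` function, and this is not necessarily how the library resolved the issue). It assumes that 0-dim values expose an `ndim` attribute equal to 0, as PyTorch tensors and NumPy arrays do, and passes them through unchanged.

```python
from typing import Any, Dict


def unnest_row(batch: Dict[str, Any]) -> Dict[str, Any]:
    """Take the first element of each column, passing 0-dim values through.

    Hypothetical helper for illustration only. Values whose `ndim` attribute
    is 0 cannot be indexed, so they are returned as they are.
    """
    row = {}
    for key, value in batch.items():
        if getattr(value, "ndim", None) == 0:
            # 0-dim value: indexing would raise IndexError, so keep it whole
            row[key] = value
        else:
            # Regular batched column: the row is its first element
            row[key] = value[0]
    return row
```

With such a guard, a transform returning `{"test": torch.tensor(1)}` would yield the tensor itself for the `test` key instead of raising.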

### Environment info

`datasets==2.8.0`, but it looks like it is also applicable to main branch version (as of 16th February)

|

CLOSED

| 2023-02-16T16:08:51

| 2023-02-22T10:30:30

| 2023-02-21T13:03:57

|

https://github.com/huggingface/datasets/issues/5539

|

aalbersk

| 4

|

[

"good first issue"

] |

5,538

|

load_dataset in seaborn is not working for me. getting this error.

|

TimeoutError Traceback (most recent call last)

~\anaconda3\lib\urllib\request.py in do_open(self, http_class, req, **http_conn_args)

1345 try:

-> 1346 h.request(req.get_method(), req.selector, req.data, headers,

1347 encode_chunked=req.has_header('Transfer-encoding'))

~\anaconda3\lib\http\client.py in request(self, method, url, body, headers, encode_chunked)

1278 """Send a complete request to the server."""

-> 1279 self._send_request(method, url, body, headers, encode_chunked)

1280

~\anaconda3\lib\http\client.py in _send_request(self, method, url, body, headers, encode_chunked)

1324 body = _encode(body, 'body')

-> 1325 self.endheaders(body, encode_chunked=encode_chunked)

1326

~\anaconda3\lib\http\client.py in endheaders(self, message_body, encode_chunked)

1273 raise CannotSendHeader()

-> 1274 self._send_output(message_body, encode_chunked=encode_chunked)

1275

~\anaconda3\lib\http\client.py in _send_output(self, message_body, encode_chunked)

1033 del self._buffer[:]

-> 1034 self.send(msg)

1035

~\anaconda3\lib\http\client.py in send(self, data)

973 if self.auto_open:

--> 974 self.connect()

975 else:

~\anaconda3\lib\http\client.py in connect(self)

1440

-> 1441 super().connect()

1442

~\anaconda3\lib\http\client.py in connect(self)

944 """Connect to the host and port specified in __init__."""

--> 945 self.sock = self._create_connection(

946 (self.host,self.port), self.timeout, self.source_address)

~\anaconda3\lib\socket.py in create_connection(address, timeout, source_address)

843 try:

--> 844 raise err

845 finally:

~\anaconda3\lib\socket.py in create_connection(address, timeout, source_address)

831 sock.bind(source_address)

--> 832 sock.connect(sa)

833 # Break explicitly a reference cycle

TimeoutError: [WinError 10060] A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond

During handling of the above exception, another exception occurred:

URLError Traceback (most recent call last)

~\AppData\Local\Temp/ipykernel_12220/2927704185.py in <module>

1 import seaborn as sn

----> 2 iris = sn.load_dataset('iris')

~\anaconda3\lib\site-packages\seaborn\utils.py in load_dataset(name, cache, data_home, **kws)

594 if name not in get_dataset_names():

595 raise ValueError(f"'{name}' is not one of the example datasets.")

--> 596 urlretrieve(url, cache_path)

597 full_path = cache_path

598 else:

~\anaconda3\lib\urllib\request.py in urlretrieve(url, filename, reporthook, data)

237 url_type, path = _splittype(url)

238

--> 239 with contextlib.closing(urlopen(url, data)) as fp:

240 headers = fp.info()

241

~\anaconda3\lib\urllib\request.py in urlopen(url, data, timeout, cafile, capath, cadefault, context)

212 else:

213 opener = _opener

--> 214 return opener.open(url, data, timeout)

215

216 def install_opener(opener):

~\anaconda3\lib\urllib\request.py in open(self, fullurl, data, timeout)

515

516 sys.audit('urllib.Request', req.full_url, req.data, req.headers, req.get_method())

--> 517 response = self._open(req, data)

518

519 # post-process response

~\anaconda3\lib\urllib\request.py in _open(self, req, data)

532

533 protocol = req.type

--> 534 result = self._call_chain(self.handle_open, protocol, protocol +

535 '_open', req)

536 if result:

~\anaconda3\lib\urllib\request.py in _call_chain(self, chain, kind, meth_name, *args)

492 for handler in handlers:

493 func = getattr(handler, meth_name)

--> 494 result = func(*args)

495 if result is not None:

496 return result

~\anaconda3\lib\urllib\request.py in https_open(self, req)

1387

1388 def https_open(self, req):

-> 1389 return self.do_open(http.client.HTTPSConnection, req,

1390 context=self._context, check_hostname=self._check_hostname)

1391

~\anaconda3\lib\urllib\request.py in do_open(self, http_class, req, **http_conn_args)

1347 encode_chunked=req.has_header('Transfer-encoding'))

1348 except OSError as err: # timeout error

-> 1349 raise URLError(err)

1350 r = h.getresponse()

1351 except:

URLError: <urlopen error [WinError 10060] A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond>

|

CLOSED

| 2023-02-16T14:01:58

| 2023-02-16T14:44:36

| 2023-02-16T14:44:36

|

https://github.com/huggingface/datasets/issues/5538

|

reemaranibarik

| 1

|

[] |

5,537

|

Increase speed of data files resolution

|

Certain datasets like `bigcode/the-stack-dedup` have so many files that loading them takes forever right from the data files resolution step.

`datasets` uses file patterns to check the structure of the repository but it takes too much time to iterate over and over again on all the data files.

This comes from `resolve_patterns_in_dataset_repository` which calls `_resolve_single_pattern_in_dataset_repository`, which iterates on all the files at

```python

glob_iter = [PurePath(filepath) for filepath in fs.glob(PurePath(pattern).as_posix()) if fs.isfile(filepath)]

```

but calling `glob` on such a dataset is too expensive. Indeed it calls `ls()` in `hffilesystem.py` too many times.

Maybe `glob` could be optimized further in `hffilesystem.py`, or the data files resolution could be implemented directly in the filesystem by checking its `dir_cache`?
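To illustrate the idea of listing once and matching many patterns against the cached result, here is a minimal stdlib-only sketch. Everything in it is an assumption made for illustration: `resolve_patterns_once` and `list_all_files` are hypothetical names, not `datasets` or `fsspec` APIs, and `fnmatchcase` is a simplification whose `*` also matches across `/`, unlike filesystem glob semantics.

```python
from fnmatch import fnmatchcase
from typing import Callable, Dict, Iterable, List


def resolve_patterns_once(
    list_all_files: Callable[[], Iterable[str]],
    patterns: Iterable[str],
) -> Dict[str, List[str]]:
    """Match every pattern against a single cached file listing.

    `list_all_files` is a hypothetical callable standing in for one
    recursive listing of the repository; it is called exactly once.
    """
    cached_files = sorted(list_all_files())  # the only listing call
    return {
        # Note: fnmatchcase lets "*" cross "/" boundaries, unlike glob
        pattern: [path for path in cached_files if fnmatchcase(path, pattern)]
        for pattern in patterns
    }
```

The cost then becomes one listing plus cheap in-memory matching per pattern, rather than one remote traversal per pattern.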

|

CLOSED

| 2023-02-16T12:11:45

| 2023-12-15T13:12:31

| 2023-12-15T13:12:31

|

https://github.com/huggingface/datasets/issues/5537

|

lhoestq

| 5

|

[

"enhancement",

"good second issue"

] |

5,536

|

Failure to hash function when using .map()

|

### Describe the bug

_Parameter 'function'=<function process at 0x7f1ec4388af0> of the transform datasets.arrow_dataset.Dataset.\_map_single couldn't be hashed properly, a random hash was used instead. Make sure your transforms and parameters are serializable with pickle or dill for the dataset fingerprinting and caching to work. If you reuse this transform, the caching mechanism will consider it to be different from the previous calls and recompute everything. This warning is only showed once. Subsequent hashing failures won't be showed._

This issue with `.map()` happens for me consistently, as also described in closed issue #4506

Dataset indices can be individually serialized using dill and pickle without any errors. I'm using tiktoken to encode in the function passed to map(). Similarly, indices can be individually encoded without error.

### Steps to reproduce the bug

```py
from datasets import load_dataset
import tiktoken

dataset = load_dataset("stas/openwebtext-10k")

enc = tiktoken.get_encoding("gpt2")


def process(example):
    ids = enc.encode(example['text'])
    ids.append(enc.eot_token)
    out = {'ids': ids, 'len': len(ids)}
    return out


tokenized = dataset.map(
    process,
    remove_columns=['text'],
    desc="tokenizing the OWT splits",
)
```

### Expected behavior

Should encode simple text objects.

### Environment info

Python versions tried: both 3.8 and 3.10.10

`PYTHONUTF8=1` as env variable

Datasets tried:

- stas/openwebtext-10k

- rotten_tomatoes

- local text file

OS: Ubuntu Linux 20.04

Package versions:

- torch 1.13.1

- dill 0.3.4 (if using 0.3.6 - same issue)

- datasets 2.9.0

- tiktoken 0.2.0

|

CLOSED

| 2023-02-16T03:12:07

| 2023-09-08T21:06:01

| 2023-02-16T14:56:41

|

https://github.com/huggingface/datasets/issues/5536

|

venzen

| 14

|

[] |

5,534

|

map() breaks at certain dataset size when using Array3D

|

### Describe the bug

`map()` magically breaks when using an `Array3D` feature and mapping it. I created a very simple dummy dataset (see below). When filtering it down to 95 elements I can apply `map`, but it breaks when filtering it down to just 96 entries, with the following exception:

```

Traceback (most recent call last):

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 3255, in _map_single

writer.finalize() # close_stream=bool(buf_writer is None)) # We only close if we are writing in a file

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_writer.py", line 581, in finalize

self.write_examples_on_file()

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_writer.py", line 440, in write_examples_on_file

batch_examples[col] = array_concat(arrays)

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1931, in array_concat

return _concat_arrays(arrays)

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1901, in _concat_arrays

return array_type.wrap_array(_concat_arrays([array.storage for array in arrays]))

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1922, in _concat_arrays

_concat_arrays([array.values for array in arrays]),

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1922, in _concat_arrays

_concat_arrays([array.values for array in arrays]),

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1920, in _concat_arrays

return pa.ListArray.from_arrays(

File "pyarrow/array.pxi", line 1997, in pyarrow.lib.ListArray.from_arrays

File "pyarrow/array.pxi", line 1527, in pyarrow.lib.Array.validate

File "pyarrow/error.pxi", line 100, in pyarrow.lib.check_status

pyarrow.lib.ArrowInvalid: Negative offsets in list array

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 2815, in map

return self._map_single(

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 546, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 513, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/fingerprint.py", line 480, in wrapper

out = func(self, *args, **kwargs)

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 3259, in _map_single

writer.finalize()

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_writer.py", line 581, in finalize

self.write_examples_on_file()

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/arrow_writer.py", line 440, in write_examples_on_file

batch_examples[col] = array_concat(arrays)

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1931, in array_concat

return _concat_arrays(arrays)

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1901, in _concat_arrays

return array_type.wrap_array(_concat_arrays([array.storage for array in arrays]))

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1922, in _concat_arrays

_concat_arrays([array.values for array in arrays]),

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1922, in _concat_arrays

_concat_arrays([array.values for array in arrays]),

File "/home/arbi01/miniconda3/envs/tmp9/lib/python3.9/site-packages/datasets/table.py", line 1920, in _concat_arrays

return pa.ListArray.from_arrays(

File "pyarrow/array.pxi", line 1997, in pyarrow.lib.ListArray.from_arrays

File "pyarrow/array.pxi", line 1527, in pyarrow.lib.Array.validate

File "pyarrow/error.pxi", line 100, in pyarrow.lib.check_status

pyarrow.lib.ArrowInvalid: Negative offsets in list array

```

### Steps to reproduce the bug

1. Put the following dataset loading script into `debug/debug.py`:

```python
import datasets
import numpy as np


class DEBUG(datasets.GeneratorBasedBuilder):
    """DEBUG dataset."""

    def _info(self):
        return datasets.DatasetInfo(
            features=datasets.Features(
                {
                    "id": datasets.Value("uint8"),
                    "img_data": datasets.Array3D(shape=(3, 224, 224), dtype="uint8"),
                },
            ),
            supervised_keys=None,
        )

    def _split_generators(self, dl_manager):
        return [datasets.SplitGenerator(name=datasets.Split.TRAIN)]

    def _generate_examples(self):
        for i in range(149):
            image_np = np.zeros(shape=(3, 224, 224), dtype=np.int8).tolist()
            yield f"id_{i}", {"id": i, "img_data": image_np}
```

2. Try the following code:

```python
import datasets


def add_dummy_col(ex):
    ex["dummy"] = "test"
    return ex


ds = datasets.load_dataset(path="debug", split="train")

# works
ds_filtered_works = ds.filter(lambda example: example["id"] < 95)
print(f"filtered result size: {len(ds_filtered_works)}")
# output:
# filtered result size: 95
ds_mapped_works = ds_filtered_works.map(add_dummy_col)

# fails
ds_filtered_error = ds.filter(lambda example: example["id"] < 96)
print(f"filtered result size: {len(ds_filtered_error)}")
# output:
# filtered result size: 96
ds_mapped_error = ds_filtered_error.map(add_dummy_col)
```

### Expected behavior

The example code does not fail.

### Environment info

Python 3.9.16 (main, Jan 11 2023, 16:05:54); [GCC 11.2.0] :: Anaconda, Inc. on linux

datasets 2.9.0

|

OPEN

| 2023-02-15T16:34:25

| 2023-03-03T16:31:33

| null |

https://github.com/huggingface/datasets/issues/5534

|

ArneBinder

| 2

|

[] |

5,532

|

train_test_split in arrow_dataset does not ensure to keep single classes in test set

|

### Describe the bug

When I have a dataset with very few (e.g. 1) examples per class and I call the `train_test_split` function on it, sometimes the only example of a class ends up in the test set, so that class is never seen during training.

### Steps to reproduce the bug

```

import numpy as np
from datasets import Dataset

data = [
    {'label': 0, 'text': "example1"},
    {'label': 1, 'text': "example2"},
    {'label': 1, 'text': "example3"},
    {'label': 1, 'text': "example4"},
    {'label': 0, 'text': "example5"},
    {'label': 1, 'text': "example6"},
    {'label': 2, 'text': "example7"},
    {'label': 2, 'text': "example8"}
]

for _ in range(10):
    data_set = Dataset.from_list(data)
    data_set = data_set.train_test_split(test_size=0.5)
    data_set["train"]
    unique_labels_train = np.unique(data_set["train"][:]["label"])
    unique_labels_test = np.unique(data_set["test"][:]["label"])
    assert len(unique_labels_train) >= len(unique_labels_test)

```

### Expected behavior

I expect to have every available class at least once in my training set.
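
The expected behaviour can be sketched in plain Python, without the `datasets` API: reserve one example per label for the training set first, then split the rest at random. The helper name `split_keeping_classes` and its parameters are hypothetical and not part of the library; this is only an illustration of the idea, not a proposed implementation.

```python
import random
from collections import defaultdict


def split_keeping_classes(rows, test_size=0.5, label_key="label", seed=0):
    """Hypothetical helper: random split that keeps every label in train."""
    rng = random.Random(seed)

    # Group row indices by label.
    by_label = defaultdict(list)
    for idx, row in enumerate(rows):
        by_label[row[label_key]].append(idx)

    # Reserve one randomly chosen example per label for the training set.
    reserved = {rng.choice(indices) for indices in by_label.values()}
    remaining = [i for i in range(len(rows)) if i not in reserved]
    rng.shuffle(remaining)

    # Fill the test set from the non-reserved examples only.
    n_test = min(int(len(rows) * test_size), len(remaining))
    test_idx = sorted(remaining[:n_test])
    train_idx = sorted(reserved | set(remaining[n_test:]))
    return [rows[i] for i in train_idx], [rows[i] for i in test_idx]


data = [
    {"label": 0, "text": "example1"},
    {"label": 1, "text": "example2"},
    {"label": 1, "text": "example3"},
    {"label": 1, "text": "example4"},
    {"label": 0, "text": "example5"},
    {"label": 1, "text": "example6"},
    {"label": 2, "text": "example7"},
    {"label": 2, "text": "example8"},
]

train, test = split_keeping_classes(data, test_size=0.5)
print(sorted({row["label"] for row in train}))
```

The same index lists could presumably be passed to `Dataset.select` to build the two splits from a real dataset.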

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-5.15.65+-x86_64-with-debian-bullseye-sid

- Python version: 3.7.12

- PyArrow version: 11.0.0

- Pandas version: 1.3.5

|

CLOSED

| 2023-02-14T16:52:29

| 2023-02-15T16:09:19

| 2023-02-15T16:09:19

|

https://github.com/huggingface/datasets/issues/5532

|

Ulipenitz

| 1

|

[] |

5,531

|

Invalid Arrow data from JSONL

|

This code fails:

```python

from datasets import Dataset

ds = Dataset.from_json(path_to_file)

ds.data.validate()

```

raises

```python

ArrowInvalid: Column 2: In chunk 1: Invalid: Struct child array #3 invalid: Invalid: Length spanned by list offsets (4064) larger than values array (length 4063)

```

This causes many issues for @TevenLeScao:

- `map` fails because it fails to rewrite invalid arrow arrays

```python

~/Desktop/hf/datasets/src/datasets/arrow_writer.py in write_examples_on_file(self)

438 if all(isinstance(row[0][col], (pa.Array, pa.ChunkedArray)) for row in self.current_examples):

439 arrays = [row[0][col] for row in self.current_examples]

--> 440 batch_examples[col] = array_concat(arrays)

441 else:

442 batch_examples[col] = [

~/Desktop/hf/datasets/src/datasets/table.py in array_concat(arrays)

1885

1886 if not _is_extension_type(array_type):

-> 1887 return pa.concat_arrays(arrays)

1888

1889 def _offsets_concat(offsets):

~/.virtualenvs/hf-datasets/lib/python3.7/site-packages/pyarrow/array.pxi in pyarrow.lib.concat_arrays()

~/.virtualenvs/hf-datasets/lib/python3.7/site-packages/pyarrow/error.pxi in pyarrow.lib.pyarrow_internal_check_status()

~/.virtualenvs/hf-datasets/lib/python3.7/site-packages/pyarrow/error.pxi in pyarrow.lib.check_status()

ArrowIndexError: array slice would exceed array length

```

- `to_dict()` **segfaults** ⚠️

```python

/Users/runner/work/crossbow/crossbow/arrow/cpp/src/arrow/array/data.cc:99: Check failed: (off) <= (length) Slice offset greater

than array length

```

To reproduce: unzip the archive and run the above code using `sanity_oscar_en.jsonl`

[sanity_oscar_en.jsonl.zip](https://github.com/huggingface/datasets/files/10734124/sanity_oscar_en.jsonl.zip)

PS: reading using pandas and converting to Arrow works though (note that the dataset lives in RAM in this case):

```python

ds = Dataset.from_pandas(pd.read_json(path_to_file, lines=True))

ds.data.validate()

```

|

OPEN

| 2023-02-14T15:39:49

| 2023-02-14T15:46:09

| null |

https://github.com/huggingface/datasets/issues/5531

|

lhoestq

| 0

|

[

"bug"

] |

5,525

|

TypeError: Couldn't cast array of type string to null

|

### Describe the bug

While processing a dataset I already uploaded to the Hub (https://huggingface.co/datasets/tj-solergibert/Europarl-ST), I found that for some splits and some languages (test split, source_lang = "nl") I get the mentioned error after applying a map function.

I already tried resetting the shorter strings (reset_cortas function). It only happens with NL, PL, RO and PT. This does not make sense, since when processing the other languages I also use the corpora of the failing ones and that does not cause any errors.

I suspect that the error may be in this direction:

We use cast_array_to_feature to support casting to custom types like Audio and Image # Also, when trying type "string", we don't want to convert integers or floats to "string". # We only do it if trying_type is False - since this is what the user asks for.

### Steps to reproduce the bug

Here I link a colab notebook to reproduce the error:

https://colab.research.google.com/drive/1JCrS7FlGfu_kFqChMrwKZ_bpabnIMqbP?authuser=1#scrollTo=FBAvlhMxIzpA

### Expected behavior

Data processing does not fail. A correct example can be seen here: https://huggingface.co/datasets/tj-solergibert/Europarl-ST-processed-mt-en

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-5.10.147+-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyArrow version: 9.0.0

- Pandas version: 1.3.5

|

CLOSED

| 2023-02-10T21:12:36

| 2023-02-14T17:41:08

| 2023-02-14T09:35:49

|

https://github.com/huggingface/datasets/issues/5525

|

TJ-Solergibert

| 6

|

[] |

5,523

|

Checking that split name is correct happens only after the data is downloaded

|

### Describe the bug

Verification of split names (=indexing data by split) happens after downloading the data. So when the split name is incorrect, users learn about it only after the data is fully downloaded, which can take a long time for large datasets.

### Steps to reproduce the bug

Load any dataset with random split name, for example:

```python

from datasets import load_dataset

load_dataset("mozilla-foundation/common_voice_11_0", "en", split="blabla")

```

and the download will start smoothly, even though there is no split named "blabla".

### Expected behavior

Raise error when split name is incorrect.
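
A minimal sketch of such a fail-fast check, assuming the list of available split names is known before the download starts. The function `check_split_name` is hypothetical, and it only handles plain split names (not slicing expressions such as `train[:10%]` or combinations like `train+test`).

```python
def check_split_name(requested, available):
    """Hypothetical pre-download check: fail fast on an unknown split name."""
    if requested not in available:
        raise ValueError(
            f"Unknown split {requested!r}. Available splits: {sorted(available)}"
        )
    return requested


# In practice `available` might come from datasets.get_dataset_split_names(...),
# which needs network access; a literal list is used here as an assumption.
available_splits = ["train", "validation", "test"]

print(check_split_name("train", available_splits))

try:
    check_split_name("blabla", available_splits)
except ValueError as err:
    print(err)
```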

### Environment info

`datasets==2.9.1.dev0`

|

OPEN

| 2023-02-10T19:13:03

| 2023-02-10T19:14:50

| null |

https://github.com/huggingface/datasets/issues/5523

|

polinaeterna

| 0

|

[

"bug"

] |

5,520

|

ClassLabel.cast_storage raises TypeError when called on an empty IntegerArray

|

### Describe the bug

`ClassLabel.cast_storage` raises `TypeError` when called on an empty `IntegerArray`.

### Steps to reproduce the bug

Minimal steps:

```python

import pyarrow as pa

from datasets import ClassLabel

ClassLabel(names=['foo', 'bar']).cast_storage(pa.array([], pa.int64()))

```

In practice, this bug arises in situations like the one below:

```python

from datasets import ClassLabel, Dataset, Features, Sequence

dataset = Dataset.from_dict({'labels': [[], []]}, features=Features({'labels': Sequence(ClassLabel(names=['foo', 'bar']))}))

# this raises TypeError

dataset.map(batched=True, batch_size=1)

```

### Expected behavior

`ClassLabel.cast_storage` should return an empty Int64Array.

### Environment info

- `datasets` version: 2.9.1.dev0

- Platform: Linux-4.15.0-1032-aws-x86_64-with-glibc2.27

- Python version: 3.10.6

- PyArrow version: 11.0.0

- Pandas version: 1.5.3

|

CLOSED

| 2023-02-09T18:46:52

| 2023-02-12T11:17:18

| 2023-02-12T11:17:18

|

https://github.com/huggingface/datasets/issues/5520

|

marioga

| 0

|

[] |

5,517

|

`with_format("numpy")` silently downcasts float64 to float32 features

|

### Describe the bug

When I create a dataset with a `float64` feature and then apply numpy formatting, the returned numpy arrays are silently downcast to `float32`.

### Steps to reproduce the bug

```python

import datasets

dataset = datasets.Dataset.from_dict({'a': [1.0, 2.0, 3.0]}).with_format("numpy")

print("feature dtype:", dataset.features['a'].dtype)

print("array dtype:", dataset['a'].dtype)

```

output:

```

feature dtype: float64

array dtype: float32

```

### Expected behavior

```

feature dtype: float64

array dtype: float64

```

### Environment info

- `datasets` version: 2.8.0

- Platform: Linux-5.4.0-135-generic-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyArrow version: 10.0.1

- Pandas version: 1.4.4

### Suggested Fix

Changing [the `_tensorize` function of the numpy formatter](https://github.com/huggingface/datasets/blob/b065547654efa0ec633cf373ac1512884c68b2e1/src/datasets/formatting/np_formatter.py#L32) to

```python

def _tensorize(self, value):
    if isinstance(value, (str, bytes, type(None))):
        return value
    elif isinstance(value, (np.character, np.ndarray)) and np.issubdtype(value.dtype, np.character):
        return value
    elif isinstance(value, np.number):
        return value

    return np.asarray(value, **self.np_array_kwargs)

```

fixes this particular issue for me. Not sure if this would break other tests. This should also avoid unnecessary copying of the array.

|

OPEN

| 2023-02-09T14:18:00

| 2024-01-18T08:42:17

| null |

https://github.com/huggingface/datasets/issues/5517

|

ernestum

| 13

|

[] |

5,514

|

Improve inconsistency of `Dataset.map` interface for `load_from_cache_file`

|

### Feature request

1. Change the `load_from_cache_file` default value to `True`.

2. Remove or alter checks from `is_caching_enabled` logic.

### Motivation

I stumbled over an inconsistency in the `Dataset.map` interface. The documentation (and source) states for the parameter `load_from_cache_file`:

```

load_from_cache_file (`bool`, defaults to `True` if caching is enabled):

If a cache file storing the current computation from `function`

can be identified, use it instead of recomputing.

```

1. `load_from_cache_file` default value is `None`, while being annotated as `bool`

2. It is inconsistent with other method signatures like `filter`, that have the default value `True`

3. The logic is inconsistent, as the `map` method checks if caching is enabled through `is_caching_enabled`. This logic is not used for other similar methods.
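
The behaviour described in the points above can be sketched as follows. This is a simplified illustration based only on the docstring and the observations in this issue, not the library's actual code; the function name `resolve_load_from_cache_file` and the `caching_enabled` argument (standing in for whatever `is_caching_enabled` returns) are assumptions.

```python
from typing import Optional


def resolve_load_from_cache_file(
    load_from_cache_file: Optional[bool], caching_enabled: bool
) -> bool:
    """Illustrative only: a `None` default falls back to the global caching flag."""
    if load_from_cache_file is None:
        return caching_enabled
    # An explicit True/False from the caller takes priority over the global flag.
    return load_from_cache_file


# `map`-style default (None): follows the global setting.
print(resolve_load_from_cache_file(None, caching_enabled=False))
# `filter`-style default (True): ignores the global setting.
print(resolve_load_from_cache_file(True, caching_enabled=False))
```

With a `None` default the global setting decides, whereas a hard-coded `True` default never consults it, which is the inconsistency between `map` and `filter` noted above.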

### Your contribution

I am not fully aware of the logic behind the caching checks. If this is just an inconsistency that grew historically, I would suggest removing the `is_caching_enabled` logic as the "default" logic. Maybe someone can give insight into whether environment variables have a higher priority than local variables or vice versa.

If this is clarified, I could adjust the source according to the "Feature request" section of this issue.

|

CLOSED

| 2023-02-08T16:40:44

| 2023-02-14T14:26:44

| 2023-02-14T14:26:44

|

https://github.com/huggingface/datasets/issues/5514

|

HallerPatrick

| 4

|

[

"enhancement"

] |

5,513

|

Some functions use a param named `type` shouldn't that be avoided since it's a Python reserved name?

|

Hi @mariosasko, @lhoestq, or whoever reads this! :)

After going through `ArrowDataset.set_format` I found out that the `type` param is actually named `type`, which shadows the Python built-in `type` (strictly speaking a built-in name rather than a reserved keyword). Shouldn't it be renamed to `format_type` before 3.0.0 is released?

Just wanted to get your input, and if applicable, tackle this issue myself! Thanks 🤗

|

CLOSED

| 2023-02-08T15:13:46

| 2023-07-24T16:02:18

| 2023-07-24T14:27:59

|

https://github.com/huggingface/datasets/issues/5513

|

alvarobartt

| 4

|

[] |

5,511

|

Creating a dummy dataset from a bigger one

|

### Describe the bug

I often want to create a dummy dataset from a bigger dataset for fast iteration when training. However, I'm having a hard time doing this especially when trying to upload the dataset to the Hub.

### Steps to reproduce the bug

```python

from datasets import load_dataset

dataset = load_dataset("lambdalabs/pokemon-blip-captions")

dataset["train"] = dataset["train"].select(range(20))

dataset.push_to_hub("patrickvonplaten/dummy_image_data")

```

gives:

```

~/python_bin/datasets/arrow_dataset.py in _push_parquet_shards_to_hub(self, repo_id, split, private, token, branch, max_shard_size, embed_external_files)

4003 base_wait_time=2.0,

4004 max_retries=5,

-> 4005 max_wait_time=20.0,

4006 )

4007 return repo_id, split, uploaded_size, dataset_nbytes

~/python_bin/datasets/utils/file_utils.py in _retry(func, func_args, func_kwargs, exceptions, status_codes, max_retries, base_wait_time, max_wait_time)

328 while True:

329 try:

--> 330 return func(*func_args, **func_kwargs)

331 except exceptions as err:

332 if retry >= max_retries or (status_codes and err.response.status_code not in status_codes):

~/hf/lib/python3.7/site-packages/huggingface_hub/utils/_validators.py in _inner_fn(*args, **kwargs)

122 )

123

--> 124 return fn(*args, **kwargs)

125

126 return _inner_fn # type: ignore

TypeError: upload_file() got an unexpected keyword argument 'identical_ok'

In [2]:

```

### Expected behavior

I would have expected this to work. For me, it's the most intuitive way of creating a dummy dataset.

### Environment info

```

- `datasets` version: 2.1.1.dev0

- Platform: Linux-4.19.0-22-cloud-amd64-x86_64-with-debian-10.13

- Python version: 3.7.3

- PyArrow version: 11.0.0

- Pandas version: 1.3.5

```

|

CLOSED

| 2023-02-08T10:18:41

| 2023-12-28T18:21:01

| 2023-02-08T10:35:48

|

https://github.com/huggingface/datasets/issues/5511

|

patrickvonplaten

| 8

|

[] |

5,508

|

Saving a dataset after setting format to torch doesn't work, but only if filtering

|

### Describe the bug

Saving a dataset after setting format to torch doesn't work, but only if filtering

### Steps to reproduce the bug

```
from datasets import Dataset

a = Dataset.from_dict({"b": [1, 2]})
a.set_format('torch')
a.save_to_disk("test_save")  # saves successfully
a.filter(None).save_to_disk("test_save_filter")  # does not
# >> [...] TypeError: Provided `function` which is applied to all elements of table returns a `dict` of types [<class 'torch.Tensor'>]. When using `batched=True`, make sure provided `function` returns a `dict` of types like `(<class 'list'>, <class 'numpy.ndarray'>)`.
```

Note: skipping the format change to torch lets this work.

### Expected behavior

Saving to work

### Environment info

- `datasets` version: 2.4.0

- Platform: Linux-6.1.9-arch1-1-x86_64-with-glibc2.36

- Python version: 3.10.9

- PyArrow version: 9.0.0

- Pandas version: 1.4.4

|

CLOSED

| 2023-02-06T21:08:58

| 2023-02-09T14:55:26

| 2023-02-09T14:55:26

|

https://github.com/huggingface/datasets/issues/5508

|

joebhakim

| 2

|

[] |

5,507

|

Optimise behaviour in respect to indices mapping

|

_Originally [posted](https://huggingface.slack.com/archives/C02V51Q3800/p1675443873878489?thread_ts=1675418893.373479&cid=C02V51Q3800) on Slack_

Considering all this, perhaps for Datasets 3.0, we can do the following:

* [ ] have `contiguous=True` by default in `.shard` (requested in the survey and makes more sense for us since it doesn't create an indices mapping)

* [x] allow calling `save_to_disk` on "unflattened" datasets

* [ ] remove "hidden" expensive calls in `save_to_disk`, `unique`, `concatenate_datasets`, etc. For instance, instead of silently calling `flatten_indices` where it's needed, it's probably better to be explicit (considering how expensive these ops can be) and raise an error instead
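
To illustrate why a contiguous shard needs no indices mapping, here is a rough sketch of the row indices each sharding mode would select. The two helper functions are hypothetical and only mirror the idea, not the library's implementation: a contiguous shard is a plain `start:end` slice, while a strided shard picks scattered rows that have to be tracked in an explicit index list.

```python
def contiguous_shard_indices(n_rows, num_shards, index):
    """Rows of one contiguous shard: a single slice of the table."""
    div, mod = divmod(n_rows, num_shards)
    # The first `mod` shards get one extra row each.
    start = div * index + min(index, mod)
    end = start + div + (1 if index < mod else 0)
    return range(start, end)


def strided_shard_indices(n_rows, num_shards, index):
    """Rows of one strided shard: every `num_shards`-th row."""
    return range(index, n_rows, num_shards)


print(list(contiguous_shard_indices(10, 3, 1)))
print(list(strided_shard_indices(10, 3, 1)))
```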

|

OPEN

| 2023-02-06T14:25:55

| 2023-02-28T18:19:18

| null |

https://github.com/huggingface/datasets/issues/5507

|

mariosasko

| 0

|

[

"enhancement"

] |

5,506

|

IterableDataset and Dataset return different batch sizes when using Trainer with multiple GPUs

|

### Describe the bug

I am training a Roberta model using 2 GPUs and the `Trainer` API with a batch size of 256.

Initially I used a standard `Dataset`, but had issues with slow data loading. After reading [this issue](https://github.com/huggingface/datasets/issues/2252), I swapped to loading my dataset as contiguous shards and passing those to an `IterableDataset`. I observed an unexpected drop in GPU memory utilization, and found the batch size returned from the model had been cut in half.

When using `Trainer` with 2 GPUs and a batch size of 256, `Dataset` returns a batch of size 512 (256 per GPU), while `IterableDataset` returns a batch size of 256 (256 total). My guess is `IterableDataset` isn't accounting for multiple cards.

### Steps to reproduce the bug

```python

import datasets
from datasets import IterableDataset
from transformers import RobertaConfig
from transformers import RobertaTokenizerFast
from transformers import RobertaForMaskedLM
from transformers import DataCollatorForLanguageModeling
from transformers import Trainer, TrainingArguments

use_iterable_dataset = True


def gen_from_shards(shards):
    for shard in shards:
        for example in shard:
            yield example


dataset = datasets.load_from_disk('my_dataset.hf')

if use_iterable_dataset:
    n_shards = 100
    shards = [dataset.shard(num_shards=n_shards, index=i) for i in range(n_shards)]
    dataset = IterableDataset.from_generator(gen_from_shards, gen_kwargs={"shards": shards})

tokenizer = RobertaTokenizerFast.from_pretrained("./my_tokenizer", max_len=160, use_fast=True)

config = RobertaConfig(
    vocab_size=8248,
    max_position_embeddings=256,
    num_attention_heads=8,
    num_hidden_layers=6,
    type_vocab_size=1)

model = RobertaForMaskedLM(config=config)

data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=True, mlm_probability=0.15)

training_args = TrainingArguments(
    per_device_train_batch_size=256
    # other args removed for brevity
)

trainer = Trainer(
    model=model,
    args=training_args,
    data_collator=data_collator,
    train_dataset=dataset,
)

trainer.train()

```

### Expected behavior

Expected `Dataset` and `IterableDataset` to have the same batch size behavior. If the current behavior is intentional, the batch size printout at the start of training should be updated. Currently, both dataset classes result in `Trainer` printing the same total batch size, even though the batch size sent to the GPUs are different.

### Environment info

datasets 2.7.1

transformers 4.25.1

|

CLOSED

| 2023-02-06T03:26:03

| 2023-02-08T18:30:08

| 2023-02-08T18:30:07

|

https://github.com/huggingface/datasets/issues/5506

|

kheyer

| 4

|

[] |

5,505

|

PyTorch BatchSampler still loads from Dataset one-by-one

|

### Describe the bug

In [the docs here](https://huggingface.co/docs/datasets/use_with_pytorch#use-a-batchsampler), it mentions the issue of the Dataset being read one-by-one, then states that using a BatchSampler resolves the issue.

I'm not sure if this is a mistake in the docs or the code, but it seems that the only way for a Dataset to be passed a list of indexes by PyTorch (instead of one index at a time) is to define a `__getitems__` method (note the plural) on the Dataset object, and since the HF Dataset doesn't have this, PyTorch executes [this line of code](https://github.com/pytorch/pytorch/blob/master/torch/utils/data/_utils/fetch.py#L58), reverting to fetching one-by-one.

### Steps to reproduce the bug

You can put a breakpoint in `Dataset.__getitem__()` or just print the args from there and see that it's called multiple times for a single `next(iter(dataloader))`, even when using the code from the docs:

```py

from torch.utils.data import DataLoader
from torch.utils.data.sampler import BatchSampler, RandomSampler

batch_sampler = BatchSampler(RandomSampler(ds), batch_size=32, drop_last=False)
dataloader = DataLoader(ds, batch_sampler=batch_sampler)

```

### Expected behavior

The expected behaviour would be for it to fetch batches from the dataset, rather than one-by-one.

To demonstrate that there is room for improvement: once I have a HF dataset `ds`, if I just add this line:

```py

ds.__getitems__ = ds.__getitem__

```

...then the time taken to loop over the dataset improves considerably (for wikitext-103, from one minute to 13 seconds with batch size 32). Probably not a big deal in the grand scheme of things, but seems like an easy win.
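
The effect can be shown with a toy stand-in that needs neither PyTorch nor `datasets`. The `fetch_batch` function below is a simplified emulation of the fetch logic described above (use `__getitems__` if the dataset has it, otherwise index one by one), and `CountingDataset` is a hypothetical class that just counts `__getitem__` calls; neither is the real implementation.

```python
class CountingDataset:
    """Toy dataset that counts how often __getitem__ is called."""

    def __init__(self, rows):
        self.rows = rows
        self.getitem_calls = 0

    def __len__(self):
        return len(self.rows)

    def __getitem__(self, key):
        self.getitem_calls += 1
        if isinstance(key, list):
            # A list of indices is served in a single call.
            return [self.rows[i] for i in key]
        return self.rows[key]


def fetch_batch(dataset, batch_indices):
    """Simplified emulation: prefer __getitems__, else fetch one by one."""
    if hasattr(dataset, "__getitems__"):
        return dataset.__getitems__(batch_indices)
    return [dataset[i] for i in batch_indices]


toy_ds = CountingDataset(list(range(100)))
fetch_batch(toy_ds, list(range(32)))
calls_one_by_one = toy_ds.getitem_calls

toy_ds.getitem_calls = 0
toy_ds.__getitems__ = toy_ds.__getitem__  # same trick as above
fetch_batch(toy_ds, list(range(32)))
calls_batched = toy_ds.getitem_calls

print(calls_one_by_one, calls_batched)
```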

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-5.10.102.1-microsoft-standard-WSL2-x86_64-with-glibc2.31

- Python version: 3.10.8

- PyArrow version: 10.0.1

- Pandas version: 1.5.3

|

CLOSED

| 2023-02-06T01:14:55

| 2023-02-19T18:27:30

| 2023-02-19T18:27:30

|

https://github.com/huggingface/datasets/issues/5505

|

davidgilbertson

| 2

|

[] |

5,500

|

WMT19 custom download checksum error

|

### Describe the bug

I use the following scripts to download data from WMT19:

```python

import datasets
from datasets import inspect_dataset, load_dataset_builder
from wmt19.wmt_utils import _TRAIN_SUBSETS,_DEV_SUBSETS

## this is a must due to: https://discuss.huggingface.co/t/load-dataset-hangs-with-local-files/28034/3
if __name__ == '__main__':
    dev_subsets,train_subsets = [],[]
    for subset in _TRAIN_SUBSETS:
        if subset.target=='en' and 'de' in subset.sources:
            train_subsets.append(subset.name)
    for subset in _DEV_SUBSETS:
        if subset.target=='en' and 'de' in subset.sources:
            dev_subsets.append(subset.name)

    inspect_dataset("wmt19", "./wmt19")
    builder = load_dataset_builder(
        "./wmt19/wmt_utils.py",
        language_pair=("de", "en"),
        subsets={
            datasets.Split.TRAIN: train_subsets,
            datasets.Split.VALIDATION: dev_subsets,
        },
    )
    builder.download_and_prepare()
    ds = builder.as_dataset()
    ds.to_json("../data/wmt19/ende/data.json")

```

And I got the following error:

```

Traceback (most recent call last): | 0/2 [00:00<?, ?obj/s]

File "draft.py", line 26, in <module>

builder.download_and_prepare() | 0/1 [00:00<?, ?obj/s]

File "/Users/hannibal046/anaconda3/lib/python3.8/site-packages/datasets/builder.py", line 605, in download_and_prepare

self._download_and_prepare(%| | 0/1 [00:00<?, ?obj/s]

File "/Users/hannibal046/anaconda3/lib/python3.8/site-packages/datasets/builder.py", line 1104, in _download_and_prepare

super()._download_and_prepare(dl_manager, verify_infos, check_duplicate_keys=verify_infos) | 0/1 [00:00<?, ?obj/s]

File "/Users/hannibal046/anaconda3/lib/python3.8/site-packages/datasets/builder.py", line 676, in _download_and_prepare

verify_checksums(s #13: 0%| | 0/1 [00:00<?, ?obj/s]

File "/Users/hannibal046/anaconda3/lib/python3.8/site-packages/datasets/utils/info_utils.py", line 35, in verify_checksums

raise UnexpectedDownloadedFile(str(set(recorded_checksums) - set(expected_checksums))) | 0/1 [00:00<?, ?obj/s]

datasets.utils.info_utils.UnexpectedDownloadedFile: {'https://s3.amazonaws.com/web-language-models/paracrawl/release1/paracrawl-release1.en-de.zipporah0-dedup-clean.tgz', 'https://huggingface.co/datasets/wmt/wmt13/resolve/main-zip/training-parallel-europarl-v7.zip', 'https://huggingface.co/datasets/wmt/wmt18/resolve/main-zip/translation-task/rapid2016.zip', 'https://huggingface.co/datasets/wmt/wmt18/resolve/main-zip/translation-task/training-parallel-nc-v13.zip', 'https://huggingface.co/datasets/wmt/wmt17/resolve/main-zip/translation-task/training-parallel-nc-v12.zip', 'https://huggingface.co/datasets/wmt/wmt14/resolve/main-zip/training-parallel-nc-v9.zip', 'https://huggingface.co/datasets/wmt/wmt15/resolve/main-zip/training-parallel-nc-v10.zip', 'https://huggingface.co/datasets/wmt/wmt16/resolve/main-zip/translation-task/training-parallel-nc-v11.zip'}

```

### Steps to reproduce the bug

see above

### Expected behavior

download data successfully

### Environment info

datasets==2.1.0

python==3.8

|

CLOSED

| 2023-02-03T05:45:37

| 2023-02-03T05:52:56

| 2023-02-03T05:52:56

|

https://github.com/huggingface/datasets/issues/5500

|

Hannibal046

| 1

|

[] |

5,499

|

`load_dataset` has ~4 seconds of overhead for cached data

|

### Feature request

When loading a dataset that has been cached locally, the `load_dataset` function takes a lot longer than it should take to fetch the dataset from disk (or memory).

This is particularly noticeable for smaller datasets. For example, with wikitext-2, comparing `load_dataset` (once cached) and `load_from_disk`, the `load_dataset` method takes 40 times longer.

⏱ 4.84s ⮜ load_dataset

⏱ 119ms ⮜ load_from_disk
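
The timing code itself isn't shown here, so the following is only a sketch of how such numbers could be collected, assuming a small context-manager helper. The name `timer` and the `results` dict are hypothetical, and `time.sleep` stands in for the call being measured.

```python
import time
from contextlib import contextmanager


@contextmanager
def timer(label, results=None):
    """Hypothetical helper: measure the wall-clock time of a code block."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        if results is not None:
            results[label] = elapsed
        print(f"{elapsed:.3f}s <- {label}")


timings = {}
with timer("sleep", timings):
    # Replace this with the call to measure, e.g. a cached load_dataset(...).
    time.sleep(0.01)
```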

### Motivation

I assume this is doing something like checking for a newer version.

If so, that's an age old problem: do you make the user wait _every single time they load from cache_ or do you do something like load from cache always, _then_ check for a newer version and alert if they have stale data. The decision usually revolves around what percentage of the time the data will have been updated, and how dangerous old data is.

For most datasets it's extremely unlikely that there will be a newer version on any given run, so 99% of the time this is just wasted time.

Maybe you don't want to make that decision for all users, but at least having the _option_ to not wait for checks would be an improvement.

### Your contribution

.

|

OPEN

| 2023-02-02T23:34:50

| 2023-02-07T19:35:11

| null |

https://github.com/huggingface/datasets/issues/5499

|

davidgilbertson

| 2

|

[

"enhancement"

] |

5,498

|

TypeError: 'bool' object is not iterable when filtering a datasets.arrow_dataset.Dataset

|

### Describe the bug

Hi,

Thanks for the amazing work on the library!

**Describe the bug**

I think I might have noticed a small bug in the filter method.

Having loaded a dataset using `load_dataset`, when I try to filter out empty entries with `batched=True`, I get a TypeError.

### Steps to reproduce the bug

```

train_dataset = train_dataset.filter(
    function=lambda example: example["image"] is not None,
    batched=True,
    batch_size=10)

```

Error message:

```

File .../lib/python3.9/site-packages/datasets/fingerprint.py:480, in fingerprint_transform.<locals>._fingerprint.<locals>.wrapper(*args, **kwargs)

476 validate_fingerprint(kwargs[fingerprint_name])

478 # Call actual function

--> 480 out = func(self, *args, **kwargs)

...

-> 5666 indices_array = [i for i, to_keep in zip(indices, mask) if to_keep]

5667 if indices_mapping is not None:

5668 indices_array = pa.array(indices_array, type=pa.uint64())

TypeError: 'bool' object is not iterable

```

**Removing `batched=True` bypasses the issue.**

### Expected behavior

According to the doc, "[batch_size corresponds to the] number of examples per batch provided to function if batched = True", so we shouldn't need to remove the `batched=True` arg?

source: https://huggingface.co/docs/datasets/v2.9.0/en/package_reference/main_classes#datasets.Dataset.filter
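
A likely explanation, assuming that with `batched=True` the function receives a dict mapping each column name to a list of values and has to return one boolean per example: `example["image"] is not None` then compares the whole list against `None` and yields a single `bool`, which matches the `zip(indices, mask)` line in the traceback. The sketch below uses a plain dict as a stand-in for a batch; the function name `keep_with_image` is hypothetical.

```python
def keep_with_image(batch):
    """Batched predicate: return one boolean per example in the batch."""
    return [image is not None for image in batch["image"]]


# Plain dict standing in for the batch passed to the function.
batch = {"image": ["img0", None, "img2"], "id": [0, 1, 2]}

# What the original lambda computes on a batch: a single bool, not a mask.
single_bool = batch["image"] is not None

# What a batched predicate is expected to produce: a per-example mask.
mask = keep_with_image(batch)
kept_ids = [i for i, to_keep in zip(batch["id"], mask) if to_keep]

print(single_bool, mask, kept_ids)
```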

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-5.4.0-122-generic-x86_64-with-glibc2.31

- Python version: 3.9.10

- PyArrow version: 10.0.1

- Pandas version: 1.5.3

|

CLOSED

| 2023-02-02T14:46:49

| 2023-10-08T06:12:47

| 2023-02-04T17:19:36

|

https://github.com/huggingface/datasets/issues/5498

|

vmuel

| 3

|

[] |

5,496

|

Add a `reduce` method

|

### Feature request

Right now the `Dataset` class implements `map()` and `filter()`, but leaves out the third functional idiom popular among Python users: `reduce`.

### Motivation

A `reduce` method is often useful when calculating dataset statistics, for example, the occurrence of a particular n-gram or the average line length of a code dataset.
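
As a rough illustration of the average-line-length case, here is what the computation looks like with `functools.reduce` over plain rows. The column name `content` and the sample rows are made up for the example, and this says nothing about how a `Dataset.reduce` method would actually be implemented.

```python
from functools import reduce


def add_line_stats(acc, example):
    """Accumulate (total characters, total lines) over examples."""
    total_chars, total_lines = acc
    lines = example["content"].splitlines()
    return total_chars + sum(len(line) for line in lines), total_lines + len(lines)


# Plain list of rows standing in for a code dataset.
rows = [{"content": "ab\ncdef"}, {"content": "ghi"}]

total_chars, total_lines = reduce(add_line_stats, rows, (0, 0))
average_line_length = total_chars / total_lines
print(average_line_length)
```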

### Your contribution

I haven't contributed to `datasets` before, but I don't expect this will be too difficult, since the implementation will closely follow that of `map` and `filter`. I could have a crack over the weekend.

|

CLOSED

| 2023-02-02T04:30:22

| 2024-11-12T05:58:14

| 2023-07-21T14:24:32

|

https://github.com/huggingface/datasets/issues/5496

|

zhangir-azerbayev

| 4

|

[

"enhancement"

] |

5,495

|

to_tf_dataset fails with datetime UTC columns even if not included in columns argument

|

### Describe the bug

There appears to be some eager behavior in `to_tf_dataset` that runs against every column in a dataset even if they aren't included in the columns argument. This is problematic with datetime UTC columns due to them not working with zero copy. If I don't have UTC information in my datetime column, then everything works as expected.

### Steps to reproduce the bug

```python

import numpy as np

import pandas as pd

from datasets import Dataset

df = pd.DataFrame(np.random.rand(2, 1), columns=["x"])

# df["dt"] = pd.to_datetime(["2023-01-01", "2023-01-01"]) # works fine

df["dt"] = pd.to_datetime(["2023-01-01 00:00:00.00000+00:00", "2023-01-01 00:00:00.00000+00:00"])

df.to_parquet("test.pq")

ds = Dataset.from_parquet("test.pq")

tf_ds = ds.to_tf_dataset(columns=["x"], batch_size=2, shuffle=True)

```

```

ArrowInvalid Traceback (most recent call last)

Cell In[1], line 12

8 df.to_parquet("test.pq")

11 ds = Dataset.from_parquet("test.pq")

---> 12 tf_ds = ds.to_tf_dataset(columns=["r"], batch_size=2, shuffle=True)

File ~/venv/lib/python3.8/site-packages/datasets/arrow_dataset.py:411, in TensorflowDatasetMixin.to_tf_dataset(self, batch_size, columns, shuffle, collate_fn, drop_remainder, collate_fn_args, label_cols, prefetch, num_workers)

407 dataset = self

409 # TODO(Matt, QL): deprecate the retention of label_ids and label

--> 411 output_signature, columns_to_np_types = dataset._get_output_signature(

412 dataset,

413 collate_fn=collate_fn,

414 collate_fn_args=collate_fn_args,

415 cols_to_retain=cols_to_retain,

416 batch_size=batch_size if drop_remainder else None,

417 )

419 if "labels" in output_signature:

420 if ("label_ids" in columns or "label" in columns) and "labels" not in columns:

File ~/venv/lib/python3.8/site-packages/datasets/arrow_dataset.py:254, in TensorflowDatasetMixin._get_output_signature(dataset, collate_fn, collate_fn_args, cols_to_retain, batch_size, num_test_batches)

252 for _ in range(num_test_batches):

253 indices = sample(range(len(dataset)), test_batch_size)

--> 254 test_batch = dataset[indices]

255 if cols_to_retain is not None:

256 test_batch = {key: value for key, value in test_batch.items() if key in cols_to_retain}

File ~/venv/lib/python3.8/site-packages/datasets/arrow_dataset.py:2590, in Dataset.__getitem__(self, key)

2588 def __getitem__(self, key): # noqa: F811

2589 """Can be used to index columns (by string names) or rows (by integer index or iterable of indices or bools)."""

-> 2590 return self._getitem(

2591 key,

2592 )

File ~/venv/lib/python3.8/site-packages/datasets/arrow_dataset.py:2575, in Dataset._getitem(self, key, **kwargs)

2573 formatter = get_formatter(format_type, features=self.features, **format_kwargs)

2574 pa_subtable = query_table(self._data, key, indices=self._indices if self._indices is not None else None)

-> 2575 formatted_output = format_table(

2576 pa_subtable, key, formatter=formatter, format_columns=format_columns, output_all_columns=output_all_columns

2577 )

2578 return formatted_output

File ~/venv/lib/python3.8/site-packages/datasets/formatting/formatting.py:634, in format_table(table, key, formatter, format_columns, output_all_columns)

632 python_formatter = PythonFormatter(features=None)

633 if format_columns is None:

--> 634 return formatter(pa_table, query_type=query_type)

635 elif query_type == "column":

636 if key in format_columns:

File ~/venv/lib/python3.8/site-packages/datasets/formatting/formatting.py:410, in Formatter.__call__(self, pa_table, query_type)

408 return self.format_column(pa_table)

409 elif query_type == "batch":

--> 410 return self.format_batch(pa_table)

File ~/venv/lib/python3.8/site-packages/datasets/formatting/np_formatter.py:78, in NumpyFormatter.format_batch(self, pa_table)

77 def format_batch(self, pa_table: pa.Table) -> Mapping:

---> 78 batch = self.numpy_arrow_extractor().extract_batch(pa_table)

79 batch = self.python_features_decoder.decode_batch(batch)

80 batch = self.recursive_tensorize(batch)

File ~/venv/lib/python3.8/site-packages/datasets/formatting/formatting.py:164, in NumpyArrowExtractor.extract_batch(self, pa_table)

163 def extract_batch(self, pa_table: pa.Table) -> dict:

--> 164 return {col: self._arrow_array_to_numpy(pa_table[col]) for col in pa_table.column_names}

File ~/venv/lib/python3.8/site-packages/datasets/formatting/formatting.py:164, in <dictcomp>(.0)

163 def extract_batch(self, pa_table: pa.Table) -> dict:

--> 164 return {col: self._arrow_array_to_numpy(pa_table[col]) for col in pa_table.column_names}

File ~/venv/lib/python3.8/site-packages/datasets/formatting/formatting.py:185, in NumpyArrowExtractor._arrow_array_to_numpy(self, pa_array)

181 else:

182 zero_copy_only = _is_zero_copy_only(pa_array.type) and all(

183 not _is_array_with_nulls(chunk) for chunk in pa_array.chunks

184 )

--> 185 array: List = [

186 row for chunk in pa_array.chunks for row in chunk.to_numpy(zero_copy_only=zero_copy_only)

187 ]

188 else:

189 if isinstance(pa_array.type, _ArrayXDExtensionType):

190 # don't call to_pylist() to preserve dtype of the fixed-size array

File ~/venv/lib/python3.8/site-packages/datasets/formatting/formatting.py:186, in <listcomp>(.0)

181 else:

182 zero_copy_only = _is_zero_copy_only(pa_array.type) and all(

183 not _is_array_with_nulls(chunk) for chunk in pa_array.chunks

184 )

185 array: List = [

--> 186 row for chunk in pa_array.chunks for row in chunk.to_numpy(zero_copy_only=zero_copy_only)

187 ]

188 else:

189 if isinstance(pa_array.type, _ArrayXDExtensionType):

190 # don't call to_pylist() to preserve dtype of the fixed-size array

File ~/venv/lib/python3.8/site-packages/pyarrow/array.pxi:1475, in pyarrow.lib.Array.to_numpy()

File ~/venv/lib/python3.8/site-packages/pyarrow/error.pxi:100, in pyarrow.lib.check_status()

ArrowInvalid: Needed to copy 1 chunks with 0 nulls, but zero_copy_only was True

```

### Expected behavior

I think there are two potential issues/fixes:

1. Proper handling of datetime UTC columns (perhaps there is something incorrect with zero copy handling here)

2. Not eagerly running against every column in a dataset when the columns argument of `to_tf_dataset` specifies a subset of columns (although I'm not sure if this is unavoidable)
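As a possible stopgap for the first point (not something the library prescribes), timezone-aware datetimes could be stored as integer microseconds since the Unix epoch before the dataset is built, so that the column holds plain integers and may sidestep the datetime conversion path. This is a minimal sketch using only the standard library; the helper names `to_epoch_microseconds` and `from_epoch_microseconds` are hypothetical and not part of `datasets` or `pyarrow`:

```python
from datetime import datetime, timedelta, timezone

# Reference point and unit used for the integer encoding (assumed: UTC epoch, microseconds).
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
ONE_MICROSECOND = timedelta(microseconds=1)


def to_epoch_microseconds(dt: datetime) -> int:
    """Convert a timezone-aware datetime to integer microseconds since the Unix epoch."""
    if dt.tzinfo is None or dt.utcoffset() is None:
        raise ValueError("expected a timezone-aware datetime")
    # Floor division of two timedeltas yields an exact int, with no float rounding.
    return (dt - EPOCH) // ONE_MICROSECOND


def from_epoch_microseconds(value: int) -> datetime:
    """Rebuild a UTC datetime from integer microseconds since the Unix epoch."""
    return EPOCH + timedelta(microseconds=value)


# Encode a column of UTC datetimes as integers, then decode it again.
rows = [datetime(2023, 2, 1, 20, 47, 33, tzinfo=timezone.utc)]
encoded = [to_epoch_microseconds(dt) for dt in rows]
decoded = [from_epoch_microseconds(v) for v in encoded]
```

The integer column could then be passed to `Dataset.from_dict` in place of the datetime column and decoded after batching. Whether this avoids the `ArrowInvalid` error in a given setup is untested here.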

### Environment info

- `datasets` version: 2.9.0

- Platform: macOS-13.2-x86_64-i386-64bit

- Python version: 3.8.12

- PyArrow version: 11.0.0

- Pandas version: 1.5.3

|

CLOSED

| 2023-02-01T20:47:33

| 2023-02-08T14:33:19

| 2023-02-08T14:33:19

|

https://github.com/huggingface/datasets/issues/5495

|

dwyatte

| 2

|

[

"bug",

"good first issue"

] |

5,494

|

Update audio installation doc page

|

Our [installation documentation page](https://huggingface.co/docs/datasets/installation#audio) says that one can use Datasets for mp3 only with `torchaudio<0.12`. `torchaudio>0.12` is actually supported too, but it requires a specific version of ffmpeg that is not easily installed on all Linux versions. There is a custom Ubuntu repo for it, and we have instructions in the code: https://github.com/huggingface/datasets/blob/main/src/datasets/features/audio.py#L327

So we should update the doc page, but first we should investigate [this issue](5488).

|

CLOSED

| 2023-02-01T19:07:50

| 2023-03-02T16:08:17

| 2023-03-02T16:08:17

|

https://github.com/huggingface/datasets/issues/5494

|

polinaeterna

| 4

|

[

"documentation"

] |

5,492

|

Push_to_hub in a pull request

|

Right now `ds.push_to_hub()` can push a dataset to `main` or to a new branch with `branch=`, but there is no way to open a pull request. Even passing `branch=refs/pr/x` doesn't seem to work: it tries to create a branch with that name.

cc @nateraw

It should be possible to tweak the use of `huggingface_hub` in `push_to_hub` to make it open a PR or push to an existing PR

|

CLOSED

| 2023-02-01T18:32:14

| 2023-10-16T13:30:48

| 2023-10-16T13:30:48

|

https://github.com/huggingface/datasets/issues/5492

|

lhoestq

| 2

|

[

"enhancement",

"good first issue"

] |

5,488

|

Error loading MP3 files from CommonVoice

|

### Describe the bug

When loading a CommonVoice dataset with `datasets==2.9.0` and `torchaudio>=0.12.0`, I get an error reading the audio arrays:

```python

---------------------------------------------------------------------------

LibsndfileError Traceback (most recent call last)

~/.local/lib/python3.8/site-packages/datasets/features/audio.py in _decode_mp3(self, path_or_file)

310 try: # try torchaudio anyway because sometimes it works (depending on the os and os packages installed)

--> 311 array, sampling_rate = self._decode_mp3_torchaudio(path_or_file)

312 except RuntimeError:

~/.local/lib/python3.8/site-packages/datasets/features/audio.py in _decode_mp3_torchaudio(self, path_or_file)

351

--> 352 array, sampling_rate = torchaudio.load(path_or_file, format="mp3")

353 if self.sampling_rate and self.sampling_rate != sampling_rate:

~/.local/lib/python3.8/site-packages/torchaudio/backend/soundfile_backend.py in load(filepath, frame_offset, num_frames, normalize, channels_first, format)

204 """

--> 205 with soundfile.SoundFile(filepath, "r") as file_:

206 if file_.format != "WAV" or normalize:

~/.local/lib/python3.8/site-packages/soundfile.py in __init__(self, file, mode, samplerate, channels, subtype, endian, format, closefd)

654 format, subtype, endian)

--> 655 self._file = self._open(file, mode_int, closefd)

656 if set(mode).issuperset('r+') and self.seekable():

~/.local/lib/python3.8/site-packages/soundfile.py in _open(self, file, mode_int, closefd)

1212 err = _snd.sf_error(file_ptr)

-> 1213 raise LibsndfileError(err, prefix="Error opening {0!r}: ".format(self.name))

1214 if mode_int == _snd.SFM_WRITE:

LibsndfileError: Error opening <_io.BytesIO object at 0x7fa539462090>: File contains data in an unknown format.

```

I assume this is because there's some issue with the mp3 decoding process. I've verified that I have `ffmpeg>=4` (on a Linux distro), which appears to be the fallback backend for `torchaudio` (at least according to #4889).

### Steps to reproduce the bug

```python

dataset = load_dataset("mozilla-foundation/common_voice_11_0", "be", split="train")

dataset[0]

```

### Expected behavior

Similar behavior to `torchaudio<0.12.0`, which doesn't result in a `LibsndfileError`.

### Environment info

- `datasets` version: 2.9.0

- Platform: Linux-5.15.0-52-generic-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyArrow version: 10.0.1

- Pandas version: 1.5.1

|

CLOSED

| 2023-01-31T21:25:33

| 2023-03-02T16:25:14

| 2023-03-02T16:25:13

|

https://github.com/huggingface/datasets/issues/5488

|

kradonneoh

| 4

|

[] |

5,487

|

Incorrect filepath for dill module

|

### Describe the bug

I installed the `datasets` package and when I try to `import` it, I get the following error:

```

Traceback (most recent call last):

File "/var/folders/jt/zw5g74ln6tqfdzsl8tx378j00000gn/T/ipykernel_3805/3458380017.py", line 1, in <module>

import datasets

File "/Users/avivbrokman/opt/anaconda3/lib/python3.9/site-packages/datasets/__init__.py", line 43, in <module>

from .arrow_dataset import Dataset

File "/Users/avivbrokman/opt/anaconda3/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 66, in <module>